Asynchronous processing with Spring tutorial

Step 1: Create a Maven-based Java project of type jar by running the following Maven archetype command.

mvn archetype:generate -DgroupId=com.mycompany.app -DartifactId=my-app -DarchetypeArtifactId=maven-archetype-quickstart -DinteractiveMode=false

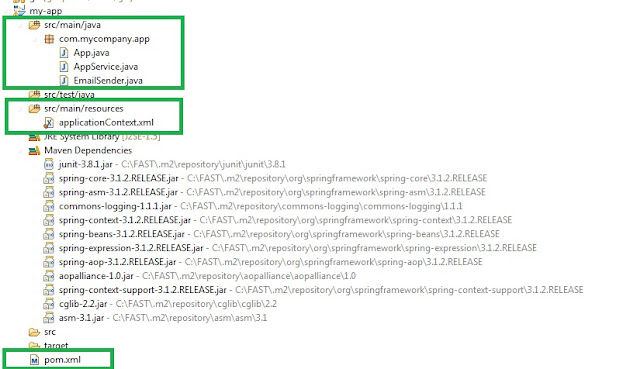

Step 2: The above command creates the Maven skeleton. Add a "resources" folder under "src/main" to store the Spring context file. Open the pom.xml file of the application and add the following dependencies. Version 2 of cglib is required by the Spring 3 jars.

<project xmlns="http://maven.apache.org/POM/4.0.0"
         xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance"
         xsi:schemaLocation="http://maven.apache.org/POM/4.0.0 http://maven.apache.org/maven-v4_0_0.xsd">
  <modelVersion>4.0.0</modelVersion>
  <groupId>com.mycompany.app</groupId>
  <artifactId>my-app</artifactId>
  <packaging>jar</packaging>
  <version>1.0-SNAPSHOT</version>
  <name>my-app</name>
  <url>http://maven.apache.org</url>
  <dependencies>
    <dependency>
      <groupId>junit</groupId>
      <artifactId>junit</artifactId>
      <version>3.8.1</version>
      <scope>test</scope>
    </dependency>
    <dependency>
      <groupId>org.springframework</groupId>
      <artifactId>spring-core</artifactId>
      <version>3.1.2.RELEASE</version>
    </dependency>
    <dependency>
      <groupId>org.springframework</groupId>
      <artifactId>spring-context</artifactId>
      <version>3.1.2.RELEASE</version>
    </dependency>
    <dependency>
      <groupId>org.springframework</groupId>
      <artifactId>spring-aop</artifactId>
      <version>3.1.2.RELEASE</version>
    </dependency>
    <dependency>
      <groupId>org.springframework</groupId>
      <artifactId>spring-context-support</artifactId>
      <version>3.1.2.RELEASE</version>
    </dependency>
    <dependency>
      <groupId>cglib</groupId>
      <artifactId>cglib</artifactId>
      <version>2.2</version>
    </dependency>
  </dependencies>
</project>

Step 3: Add the applicationContext.xml Spring file to the "src/main/resources" folder.

<beans xmlns="http://www.springframework.org/schema/beans"
       xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance"
       xmlns:p="http://www.springframework.org/schema/p"
       xmlns:context="http://www.springframework.org/schema/context"
       xmlns:task="http://www.springframework.org/schema/task"
       xsi:schemaLocation="
         http://www.springframework.org/schema/beans http://www.springframework.org/schema/beans/spring-beans-3.0.xsd
         http://www.springframework.org/schema/context http://www.springframework.org/schema/context/spring-context-3.0.xsd
         http://www.springframework.org/schema/task http://www.springframework.org/schema/task/spring-task-3.0.xsd">

  <context:component-scan base-package="com.mycompany" />

  <task:annotation-driven />

</beans>

Step 4: Define the Java classes under the src/main/java/com/mycompany/app folder as shown below.

Firstly, the main class App.java, which bootstraps Spring via applicationContext.xml.

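package com.mycompany.app;

import org.springframework.context.support.ClassPathXmlApplicationContext;

/**
 * Bootstraps the Spring context and triggers a user registration.
 */
public class App
{
    public static void main(String[] args)
    {
        // Load the bean definitions from src/main/resources/applicationContext.xml
        ClassPathXmlApplicationContext appContext = new ClassPathXmlApplicationContext(new String[]
        {
            "applicationContext.xml"
        });

        // The @Service class AppService is registered under the default bean name "appService"
        AppService appService = (AppService) appContext.getBean("appService");
        appService.registerUser("skill");
    }
}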

Secondly, the AppService.java class.

package com.mycompany.app;

import org.springframework.beans.factory.annotation.Autowired;
import org.springframework.stereotype.Service;

@Service
public class AppService
{
    @Autowired
    private EmailSender mailUtility;

    public void registerUser(String userName)
    {
        System.out.println(Thread.currentThread().getName());
        System.out.println("User registration for " + userName + " complete");
        mailUtility.sendMail(userName);
        System.out.println("Registration Complete. Mail will be sent asynchronously.");
    }
}

Finally, the EmailSender class, whose sendMail method runs asynchronously because of the @Async annotation.

package com.mycompany.app;

import org.springframework.scheduling.annotation.Async;
import org.springframework.stereotype.Component;

@Component
public class EmailSender
{
    @Async
    public void sendMail(String name)
    {
        System.out.println(Thread.currentThread().getName());
        System.out.println("started producing email content");
        try
        {
            Thread.sleep(3000); // to simulate email sending
        }
        catch (InterruptedException e)
        {
            // Restore the interrupt flag instead of swallowing the interruption
            Thread.currentThread().interrupt();
        }
        System.out.println("email sending has been completed");
    }
}
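To get a feel for what @Async arranges for you, here is a rough plain-Java sketch that hands the mail step to an executor by hand. It is only an approximation of the idea, not how Spring implements it: the class name ManualAsyncDemo and its methods are made up for illustration, a single-thread ExecutorService stands in for Spring's task executor, and the lambda assumes Java 8 or later.

```java
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.concurrent.Future;
import java.util.concurrent.TimeUnit;

public class ManualAsyncDemo {

    /** Hypothetical stand-in for EmailSender.sendMail; returns the name of the thread that ran it. */
    static String sendMail(String name) {
        String worker = Thread.currentThread().getName();
        System.out.println(worker + ": producing email content for " + name);
        return worker;
    }

    /** Submits sendMail to a separate thread and returns {callerThread, workerThread}. */
    static String[] registerUser(String userName) {
        ExecutorService executor = Executors.newSingleThreadExecutor();
        try {
            String caller = Thread.currentThread().getName();
            // submit() returns immediately; the task itself runs on the executor's thread
            Future<String> pending = executor.submit(() -> sendMail(userName));
            System.out.println(caller + ": registration complete, mail handed off");
            // Wait for the worker only so that its thread name can be reported
            return new String[] { caller, pending.get(5, TimeUnit.SECONDS) };
        } catch (Exception e) {
            throw new IllegalStateException("mail task failed", e);
        } finally {
            executor.shutdown();
        }
    }

    public static void main(String[] args) {
        String[] threads = registerUser("skill");
        System.out.println("caller=" + threads[0] + ", worker=" + threads[1]);
    }
}
```

The caller and worker thread names differ, which is the same effect the thread-name printing in AppService and EmailSender is meant to reveal. With @Async you do not write any of this dispatch code yourself.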

The output will be:

main
User registration for skill complete
Registration Complete. Mail will be sent asynchronously.
SimpleAsyncTaskExecutor-1
started producing email content
email sending has been completed

Q. How do you know that the EmailSender runs asynchronously on a separate thread?

A. Firstly, Thread.currentThread().getName() was added to print the thread names, and you can see that AppService runs on the "main" thread while EmailSender runs on the "SimpleAsyncTaskExecutor-1" thread. Secondly, Thread.sleep(3000) was added to demonstrate that "Registration Complete. Mail will be sent asynchronously." gets printed without being blocked by the mail step. If you comment out the "@Async" annotation in "EmailSender" and rerun App.java, you will get a different output, as shown below.

main
User registration for skill complete
main
started producing email content
email sending has been completed
Registration Complete. Mail will be sent asynchronously.

As you can see, there is only one thread named "main", and the order of the output is different.
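The change in ordering can also be reproduced without Spring. The sketch below is an illustration under assumptions, not the tutorial's code: OrderingDemo and its methods are hypothetical names, an ExecutorService plays the part of the async executor, and a CountDownLatch replaces Thread.sleep(3000) so that the slow mail step finishes in a predictable position rather than depending on timing.

```java
import java.util.List;
import java.util.concurrent.CopyOnWriteArrayList;
import java.util.concurrent.CountDownLatch;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.concurrent.Future;
import java.util.concurrent.TimeUnit;

public class OrderingDemo {

    /** Simulated slow mail step: waits on the latch, then records that it finished. */
    static void sendMail(List<String> events, CountDownLatch slowDown) {
        try {
            slowDown.await(5, TimeUnit.SECONDS); // stands in for Thread.sleep(3000)
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
        }
        events.add("email sending has been completed");
    }

    /** Runs one registration and returns the events in the order they were recorded. */
    static List<String> run(boolean async) {
        List<String> events = new CopyOnWriteArrayList<>();
        events.add("User registration for skill complete");

        if (!async) {
            // Synchronous case: the caller is blocked until the mail step returns
            sendMail(events, new CountDownLatch(0));
            events.add("Registration Complete");
            return events;
        }

        // Asynchronous case: the caller continues while the worker is still busy
        CountDownLatch callerDone = new CountDownLatch(1);
        ExecutorService executor = Executors.newSingleThreadExecutor();
        try {
            Future<?> mail = executor.submit(() -> sendMail(events, callerDone));
            events.add("Registration Complete");
            callerDone.countDown();          // let the slow mail step finish
            mail.get(5, TimeUnit.SECONDS);   // wait only so the full list can be returned
        } catch (Exception e) {
            throw new IllegalStateException("mail task failed", e);
        } finally {
            executor.shutdown();
        }
        return events;
    }

    public static void main(String[] args) {
        System.out.println("sync:  " + run(false));
        System.out.println("async: " + run(true));
    }
}
```

In the synchronous run "Registration Complete" is recorded last, and in the asynchronous run it is recorded before the mail step finishes, which mirrors the two outputs above.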
